Dec 28, 2022 Tags: oss, rant, security

This post is at least a year old.

Caveat: These are my personal opinions, not the opinions of my employer or any open source projects I work on or with.

I wrote this post back in October, but shelved it for being too circuitous and spending too much time on background (like explaining what ReDoS is). This is my attempt to cut it down to just the pithy (read: ranty) bits, spurred on by the continued appearance of mostly useless ReDoS CVEs.

TL;DR: ReDoS “vulnerabilities” are, overwhelmingly, indistinguishable from malicious noise:

The proliferation of ReDoS vulnerabilities is the product of badly misaligned incentives in separate but related domains: vulnerability feeds and supply chain security vendors.

Vulnerability feeds are not even remotely new: NIST’s CVE feed is (more than) old enough to drink, and has been a core part of automating reporting and triage since its inception.

Having your vulnerability appear in a vulnerability feed has historically had both practical and political benefits:

Practical: being assigned a vulnerability ID means that your vulnerability appears automatically in all sorts of reporting and remediation systems, reducing the amount of manual effort needed to spread awareness of the underlying problem.

Political: vulnerability feeds are public, and thus can be used to apply public pressure towards getting a vulnerability fixed or, at the least, acknowledged by the responsible vendor. Someone with a vulnerability ID can (in principle) walk into their executive’s office and offer proof that the public knows about an embarrassing weakness, and that it’s therefore in the company’s interest to address it as soon as possible.

As a consequence of those benefits, there’s a natural third use that arises for vulnerability feeds: clout. Security researchers love being assigned CVEs for their work1; they put it on their resumes and in their talk slides as evidence of their prowess and domain knowledge.

There’s nothing necessarily wrong with this: it’s essentially a seal of quality! But it does introduce a perverse incentive: when public acknowledgement of a report is itself seen as a sign of quality, any report will do. This in turn means a search for easy things to report, and it doesn’t get much easier than ReDoS2.
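To make "easy" concrete: the footnote on this point says a trivial regular expression is enough to find ReDoS candidates. The sketch below is one guess at what such a scanner could look like, not any vendor's actual method. The names `NESTED_QUANTIFIER` and `looks_redos_prone` are hypothetical, and the heuristic (a group ending in `+` or `*` that is itself repeated) will both miss real cases and flag harmless ones.

```python
import re

# Heuristic only (an assumption for illustration): flag a group that ends in
# + or * and is itself repeated, e.g. (a+)+ or (\d*)*.
NESTED_QUANTIFIER = re.compile(r"\([^()]*[+*]\)[+*]")


def looks_redos_prone(pattern_source: str) -> bool:
    """Return True if the regex source contains a nested-quantifier shape."""
    return NESTED_QUANTIFIER.search(pattern_source) is not None


if __name__ == "__main__":
    for candidate in (r"^(a+)+$", r"(\d*)*x", r"^[a-z]+$", r"(abc)+"):
        print(candidate, looks_redos_prone(candidate))
```

Note what the scanner does not do: it says nothing about whether the flagged pattern ever sees attacker-controlled input, or whether anything bad happens if it hangs. That gap is the whole problem.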

“Software supply chain security” is really hot right now: there are dozens of startups3 vying for attention (and public/private money) in the domain.

Most are selling small variations on the same underlying product4: a tool or other integration that scans software dependencies and reasons about them, usually to the end of producing an SBOM or other machine-readable report for subsequent analysis and remediation5.

Consequently, the need for differentiation is high: these companies need to set themselves apart from their cohort, in an ecosystem that’s otherwise difficult to differentiate in. Thus our second misaligned incentive: discovering volumes of low-quality “vulnerabilities” and churning out breathless blogspam for each.

This misaligned incentive preys on other misalignments:

Technology (and especially security) journalism that optimizes for eye-poppers (Massive typo-squatting attack on $INDEX! $STARTUP finds over 70 critical6 vulnerabilities in open source projects!) over impact analysis (How many squatted package copies were actually downloaded? Why aren’t these “critical” vulnerabilities being exploited if they’re critical?).

Asymmetric maintenance and response burden: it’s easy to submit a ReDoS vulnerability report to an open source project. Remediation is another matter: even simple patches (which, blessedly, ReDoS remediations tend to have) require serious maintainer attention in ecosystems where even tiny changes can produce breakages for millions of downstream users.

In other words: blasting open source projects with low effort ReDoS “vulnerabilities” requires maintainers to make significant time commitments, commitments that would otherwise be allocated towards feature development, stability, other security tasks, or just about anything else ordinarily needed to keep a project running and useful. It’s a demand for another slice of maintainers’ time and resources, one that’s difficult to decline lest they be tarnished as “not taking security seriously.”7

I’ll sum this point up with a thought experiment: let’s say someone submits a patch for a “high” severity ReDoS in a popular tool and, in the process, accidentally introduces a bug that causes failures on millions of machines until a fixed patch can be rolled out. Which was worse: the ReDoS or the actual DoS?

So far, this has culminated in a culture of “worse is worse”8 among software supply chain vendors9: vendors have been incentivized to “shoot first and ask questions later,” with no regard for report quality or increased burden on open source maintainers10. This is not sustainable in the long term!

I’ll just be blunt about it: 99.9% of developers do not care about ReDoS “vulnerabilities,” and they’re right not to care. That includes in web development, one of the supposed key targets for ReDoS (and thus audiences for remediation).

I will not make the claim that every single ReDoS “vulnerability” is pure junk11. Instead, I’ll say that every single ReDoS “vulnerability” that I’ve ever seen has been pure junk, and has fallen into one of two categories:

“Completely irrelevant”: domains like developer tooling, command line tools for data processing, &c: here, a ReDoS “attack” manifests as a hung command-line program. The user interrupts it, debugs it, and goes on with their life. At no point is any security property of the system violated.

“Spicy DoS”: domains like web development, protocol design, &c: here, a ReDoS “attack” manifests as a waste of compute resources, theoretically taking the service down with excess load. Except, of course, that no attacker bothers to do this: it’s simpler, easier, and just as effective to perform a DoS the “good old fashioned” way.

This is even before any of the really cheap shots, like observing that the entire bug class is based on unreliable premises: that there are no timeouts or resource limits anywhere else in the system (almost always false, particularly in web development), and that the regular expression engine itself is susceptible to pathological runtime behavior (plenty aren’t).
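The first premise is easy to poke at. Below is a minimal sketch, assuming nothing more than the Python standard library, of a deadline that lives outside the regex engine: the match runs in a child process and is killed if it overruns. The helper name `search_with_deadline` and the exit-code convention are hypothetical. Real services usually get the same effect from request timeouts and worker limits rather than a process per match, so treat this as a demonstration of the idea, not a recommended design.

```python
import subprocess
import sys

# Child script: search argv[2] with pattern argv[1].
# Exit code 0 means "matched", 3 means "no match" (an arbitrary convention).
_CHILD = (
    "import re, sys; "
    "sys.exit(0 if re.search(sys.argv[1], sys.argv[2]) else 3)"
)


def search_with_deadline(pattern: str, text: str, seconds: float):
    """Return True/False for match/no match, or None if the deadline expired."""
    try:
        done = subprocess.run(
            [sys.executable, "-c", _CHILD, pattern, text],
            timeout=seconds,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before re-raising, so nothing lingers.
        return None
    if done.returncode not in (0, 3):
        raise RuntimeError(f"child failed with exit code {done.returncode}")
    return done.returncode == 0


if __name__ == "__main__":
    # A textbook backtracking-prone pattern against a near-miss input.
    print(search_with_deadline(r"^(a+)+$", "a" * 40 + "!", 1.0))
    # The same pattern on a short, matching input finishes immediately.
    print(search_with_deadline(r"^(a+)+$", "aaaa", 30.0))
```

With any deadline in place, the "attack" costs the attacker one request and costs the service at most the deadline, which is the same bargain as any other slow request.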

As with every rule, there are exceptions: I am positive there are instances where being able to cause a Denial of Service through a regular expression violates some important security property. But there is no public evidence whatsoever that these instances warrant the noise, make-work, and consequent fatigue that their reports induce.

Security fatigue is badly overlooked in the security tooling community; I could12 write an entire separate post on it.

Instead, I’ll make it pithy: your vulnerability reports and tooling don’t matter a single bit unless a responsible party cares about them. The single easiest way to get the responsible party to stop caring is to make it easier to not care13 than to care.

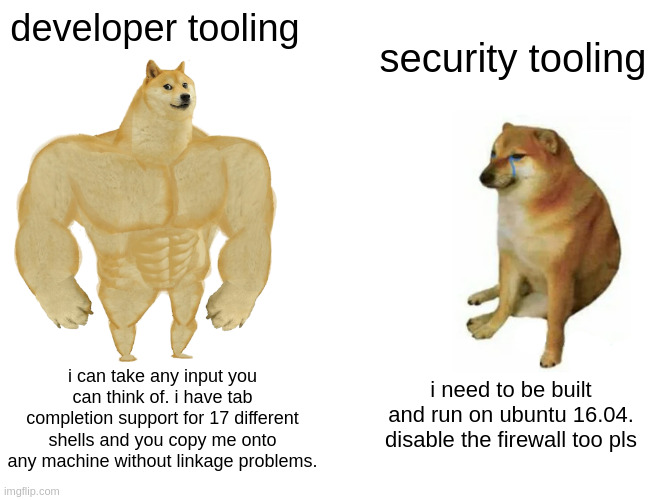

Programmers want to be delighted by their tools: they like tools that do the right thing by default, that nudge (but don’t prod) them in the right direction, that integrate into their existing workflows and want to be integrated, rather than demanding unique treatment. The best tools reduce the amount of work an engineer needs to do; the second best tools don’t change the volume of work but make it more enjoyable.

Security tools are, overwhelmingly, not delightful: they’re bespoke and picky about their inputs and runtimes, require their own dedicated workflows, and, most important of all, create work that didn’t exist before for reasons that are opaque and unaccountable without domain experience14.

(Pictured: how your developers see the security tooling you bought for them.)

ReDoS and other junk “vulnerability” classes make a bad situation worse: they add more fatigue to already fatigue-inducing tools by requiring developers to mentally correct for over-scored vulnerabilities, to wade through the cognitive dissonance of targeting a metric (no vulnerable dependencies!) versus their domain experience (this vulnerability is fake!), &c &c.

And finally, as I’ve hinted before, the failure mode for a security-fatigued engineer is itself a security problem: engineers who feel like they only respond to useless reports develop the habit of ignoring all reports, including the real ones. When actual critical vulnerability reports roll in, they lose time unlearning all of the muscle memory that bad data instills in them. In this way, the proliferation of ReDoS “vulnerabilities” is itself a Denial of Service against the people and mechanisms that keep software ecosystems secure.

It’s not fair for me to rant without offering solutions. So here are some things we can do to immediately improve the state of affairs:

Security feeds: ban ReDoS vulnerability reports now. The signal-to-noise ratio on ReDoS reports is unacceptable and is a security risk in itself; a blanket ban is a justifiable stop-gap until our reporting and scoring formats can express the nuance necessary for the tiny minority of ReDoS vulnerabilities that actually matter. No serious vulnerability feed accepts the constant stream of bogus beg bounty reports submitted to vendors; ReDoS “vulnerabilities” should be treated with the same default skepticism.

Software supply chain companies: stop juking the stats. There’s enough bad software out there in the world; you shouldn’t have to bump your numbers with low-signal, high-noise “vulnerabilities.” Even better: realign your incentives towards security throughput rather than security inundation. Your goal should be to make consumers of your tooling as productive as possible, rather than burying them in noise as evidence of your own products’ functioning.

Open source developers: just say no. We all know that ReDoS “vulnerability” that landed in your lap (probably from some automated pipeline somewhere) is junk; you can say no (and say it loudly) to fixing it. Vulnerability feeds and vendors get away with chasing their misaligned incentives because, at the end of the day, it’s easier for an open source maintainer to shrug and accept a pointless patch than to employ the actual principles of vulnerability analysis15. The more you say no and complain about this crap, the more pressure the responsible parties feel to realign their incentives towards helping you, rather than achieving throughput statistics that please investors.

At least, when that work is public. ↩

This is not sarcasm: you can find ReDoS “vulnerabilities” with a trivial regular expression. This should raise a question in your mind: what kind of attacker leaves such supposedly valuable and incredibly easy to find vulnerabilities on the table? Unless, of course, they really aren’t vulnerabilities at all. ↩

And established businesses. ↩

Or, more accurately, software subscription services. ↩

Automated patching, automated cortisol drip for your DevSecOps team, &c. ↩

The fact that this ReDoS “vulnerability” in wheel can get a “high” severity rating should tell you everything you need to know about the value of our current criticality scoring systems. ↩

To be fair to reporters: there are lots of open source maintainers who don’t take security seriously. And that’s very bad! But the solution here needs to include recognizing that maintainers who otherwise aren’t trained in security are increasingly being asked to tackle increasingly vague threat models (with unrealistic threat ratings). Their skepticism, while perhaps not generally warranted, is understandable. ↩

With apologies to Richard P. Gabriel. ↩

I’m generalizing; there’s a lot of excellent work being done in this space, and any company that I can single out for crapping up vulnerability feeds is probably doing something interesting that I’d otherwise admire. So, in the interest of politeness, I’m simply not going to name names here. ↩

Burden which, again, has actual security and ecosystem stability consequences. ↩

Meaning not meaningfully exploitable, only exploitable insofar as the entire system is exploitable, or so woefully over-scored as to have more comedy than security value. ↩

But won’t, for both your sanity and mine. ↩

Read: less stressful. ↩

From a non-security engineer’s perspective, of course. From our security perspective, that work has always existed and the reasons behind it are straightforward. But they don’t know that, and security tools do a miserable job of surfacing their justifications. ↩

Namely: actually modeling the threat, scoping risks, and performing a cost-benefit analysis on remediation (see: the nightmare scenario with a bad patch above). ↩