Nov 11, 2018 Tags: data

This post is at least a year old.

All of the techniques and data described in this post were used and collected for research purposes only.

Only publicly available information is presented below and in the linked dataset.

Did you know that New York City has its own .nyc domain? As of November 3rd, there are 71,762 active domains[1] under the .nyc gTLD[2].

The first .nyc domain registered was nic.nyc, unsurprisingly.

I was originally going to run a WHOIS lookup for each domain, but they (the Registry Operators for .nyc) started limiting WHOIS results back in May. Oh well.

Instead, I ran DNS queries to see how many domains were currently pointing to servers. Of the 71,762 domains in the dataset, 65,009 have DNS records pointing to one or more IPs or CNAMEs[3][4][5].
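For illustration, here is a minimal Python sketch of that liveness check. It is not the footnoted `domains2ips`/`livesites` pair. It assumes a plain list of domain names and uses the standard library's `socket.getaddrinfo`, which follows CNAME chains to their final IPv4 addresses rather than recording the CNAMEs themselves, so its counts could differ slightly from the ones above. The resolver is a parameter, which lets you try the filter offline.

```python
import socket
from typing import Callable, Iterable


def resolve_ipv4(domain: str) -> list[str]:
    """Return the sorted, de-duplicated IPv4 addresses for a domain.

    getaddrinfo follows CNAMEs, so aliases come back as their targets' IPs
    (unlike the post's data, which kept CNAMEs). Empty list = no resolution.
    """
    try:
        infos = socket.getaddrinfo(domain, None, family=socket.AF_INET)
    except (socket.gaierror, UnicodeError):
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def live_domains(
    domains: Iterable[str],
    resolver: Callable[[str], list[str]] = resolve_ipv4,
) -> dict[str, list[str]]:
    """Map each domain that resolves to at least one address to its addresses."""
    live = {}
    for domain in domains:
        ips = resolver(domain)
        if ips:
            live[domain] = ips
    return live


if __name__ == "__main__":
    # Offline example with a stubbed resolver and hypothetical data.
    fake = {"a.nyc": ["192.0.2.1"], "b.nyc": []}
    print(live_domains(fake, resolver=lambda d: fake[d]))  # {'a.nyc': ['192.0.2.1']}
```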

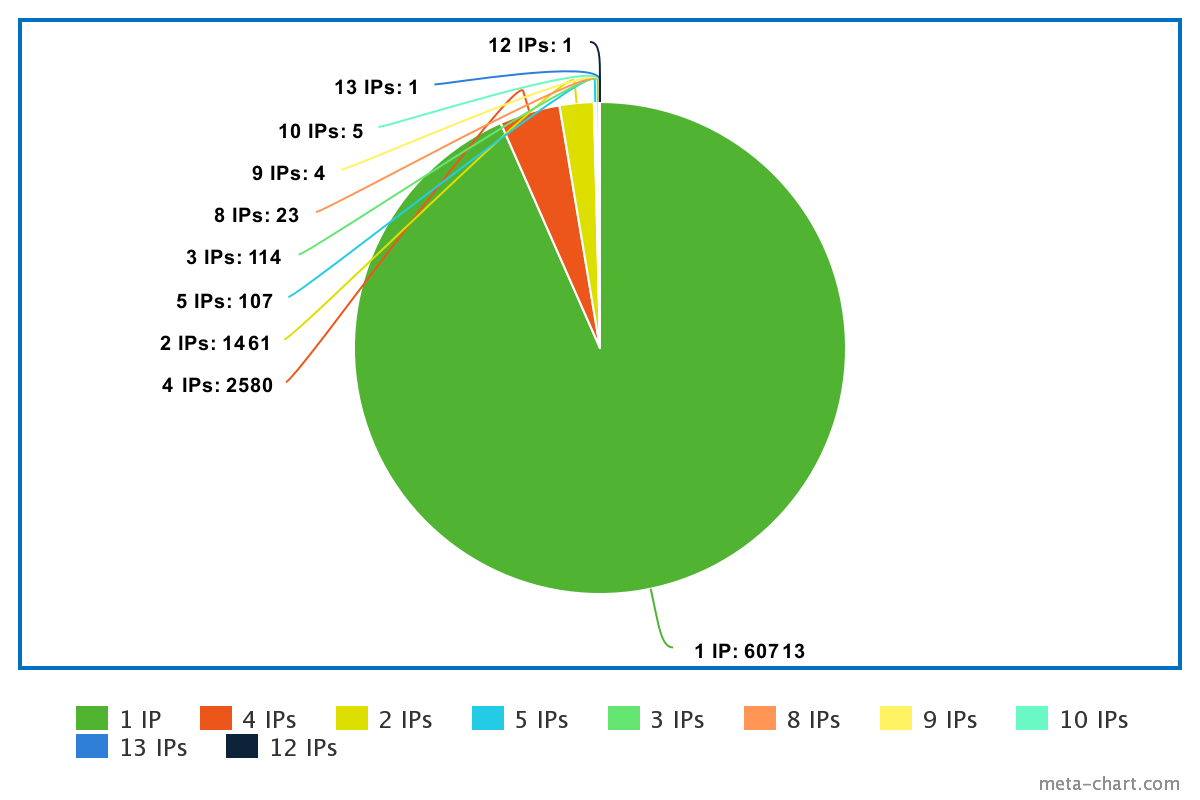

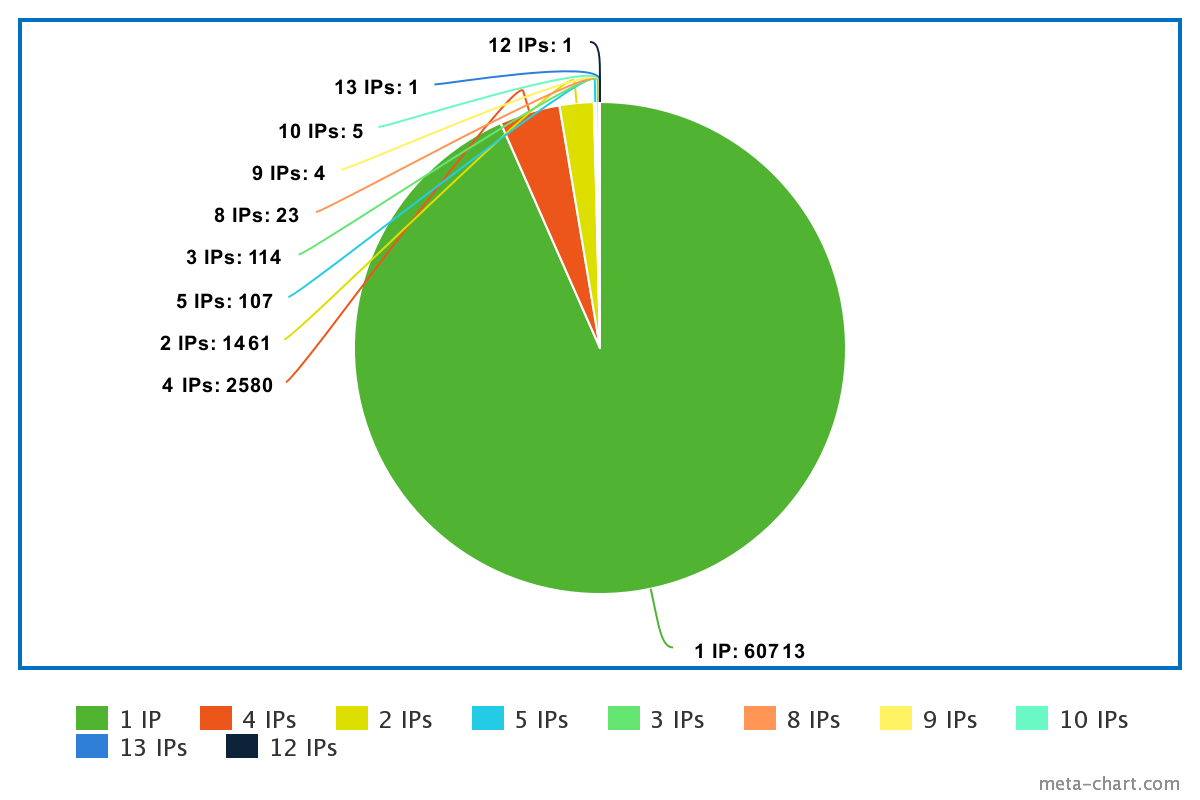

The vast majority of sites have just one IP, while a sizable minority have four (consistently CDN IPs).

Across those 65,009 domains there are 8550 unique IPs[6], fewer than I would have thought.
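Both of these numbers fall out of simple aggregations. Here is a sketch, assuming the live results are held in a hypothetical in-memory `dict` that maps each domain to its list of IPs (the post's own scripts read `livesites.jsonl` instead):

```python
from collections import Counter


def ips_per_domain(live: dict[str, list[str]]) -> Counter:
    """Histogram: number of IPs -> how many domains have that many."""
    return Counter(len(ips) for ips in live.values())


def unique_ips(live: dict[str, list[str]]) -> set[str]:
    """Every distinct IP across all domains; a shared CDN IP is counted once."""
    return {ip for ips in live.values() for ip in ips}


# Hypothetical sample data (documentation-range IPs), not from the real dataset.
live = {
    "a.nyc": ["192.0.2.10"],
    "b.nyc": ["192.0.2.10"],
    "c.nyc": ["198.51.100.1", "198.51.100.2", "198.51.100.3", "198.51.100.4"],
}
print(ips_per_domain(live))   # Counter({1: 2, 4: 1})
print(len(unique_ips(live)))  # 5
```

The unique count comes out much smaller than the domain count whenever many domains share a few hosting or CDN IPs.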

The top IPs are, unsurprisingly, large CDNs and hosting providers[7][8]:

| IP | Entity | Number of domains (non-exclusive) |
|---|---|---|
| 184.168.131.241 | GoDaddy | 3289 |
| 208.91.197.27 | Confluence-Networks | 2686 |
| 198.185.159.144 | Squarespace | 2636 |
| 198.49.23.145 | Squarespace | 2499 |
| 198.49.23.144 | Squarespace | 2485 |
| 198.185.159.145 | Squarespace | 2485 |
| 159.8.40.54 | Softlayer Technologies (IBM) | 1611 |
| 23.236.62.147 | | 1045 |
| 54.219.145.76 | Amazon | 759 |
| 184.168.221.96 | GoDaddy | 632 |

Reader: I’d never heard of Confluence-Networks before and couldn’t find any real information about them online, so I’d appreciate any insight you might have.
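The "non-exclusive" counts in the table can be read as: for each IP, how many domains list it among their records, so a domain with four CDN IPs adds one to each of the four. Here is a hypothetical Python stand-in for `ipfreqs`/`topips`, assuming a `dict` mapping each domain to its IPs:

```python
from collections import Counter


def ip_frequencies(live: dict[str, list[str]]) -> Counter:
    """For each IP, the number of domains whose records include it."""
    freqs = Counter()
    for ips in live.values():
        # set() so an IP repeated within one domain's records is counted once
        freqs.update(set(ips))
    return freqs


def top_ips(live: dict[str, list[str]], n: int = 10) -> list[tuple[str, int]]:
    """The n most widely shared IPs, most common first."""
    return ip_frequencies(live).most_common(n)


# Hypothetical sample data (documentation-range IPs).
live = {
    "a.nyc": ["192.0.2.1", "192.0.2.2"],
    "b.nyc": ["192.0.2.1"],
    "c.nyc": ["192.0.2.1", "192.0.2.3"],
    "d.nyc": ["192.0.2.2"],
}
print(top_ips(live, 2))  # [('192.0.2.1', 3), ('192.0.2.2', 2)]
```

Because one domain can contribute to several IPs, the counts in the table can sum to more than the number of live domains.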

I decided to run nmap against those 65,009 live domains, checking only a few common ports.

This ended up taking about four days in “polite” (-T2) mode:

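```bash
# Scan each live domain for FTP, SSH, HTTP and HTTPS, appending
# greppable (-oG) results for every host to nmap.txt.
while read -r domain; do
    nmap -v -p 21,22,80,443 -T2 --append-output -oG nmap.txt "${domain}"
done < livedomains.txt
```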

(On newer versions of nmap, `nmap -iL <file>` does about the same thing as this loop, but my older version didn’t support domains via that flag.)

This resulted in a big old text file in nmap’s “greppable” format (protip: do not use this format), from which I extracted port information[9]. Here’s the breakdown of open ports:

| Service | Number of domains | Percent of live domains |
|---|---|---|
| FTP (21) | 6487 | 9.98% |
| SSH (22) | 8021 | 12.34% |
| HTTP (80) | 64216 | 98.78% |
| HTTPS (443) | 17313 | 26.63% |
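If you do end up with greppable output anyway, tallying open ports is mostly string splitting. This is a rough Python sketch, not the post's `nmap2ports` script. It assumes the `-oG` layout: tab-separated fields, including a `Ports:` field whose comma-separated entries look like `port/state/protocol/owner/service/rpc_info/version/`. It will misparse any version string that itself contains `", "`, which is one more reason to prefer nmap's XML output (`-oX`). Percentages are computed against the number of live domains, as in the table.

```python
import re
from collections import Counter
from typing import Iterable

# "Host: <ip> (<hostname>)" at the start of every -oG host line.
HOST_RE = re.compile(r"^Host: (\S+) \(([^)]*)\)")


def parse_grepable(lines: Iterable[str]) -> dict[str, set[int]]:
    """Map each scanned host (its hostname, else its IP) to its open TCP ports."""
    open_ports: dict[str, set[int]] = {}
    for line in lines:
        match = HOST_RE.match(line)
        if not match:
            continue  # comment lines such as "# Nmap done ..."
        ip, hostname = match.groups()
        host = hostname or ip
        for field in line.rstrip("\n").split("\t"):
            if not field.startswith("Ports: "):
                continue  # e.g. "Status: Up" or "Ignored State: ..."
            # Entries: port/state/protocol/owner/service/rpc_info/version/
            for entry in field[len("Ports: "):].split(", "):
                parts = entry.split("/")
                if len(parts) >= 3 and parts[1] == "open" and parts[2] == "tcp":
                    open_ports.setdefault(host, set()).add(int(parts[0]))
    return open_ports


def port_breakdown(
    open_ports: dict[str, set[int]], live_domains: int
) -> dict[int, tuple[int, float]]:
    """Port -> (hosts with it open, percent of all live domains to 2 d.p.)."""
    counts = Counter(port for ports in open_ports.values() for port in ports)
    return {
        port: (n, round(100 * n / live_domains, 2))
        for port, n in sorted(counts.items())
    }
```

For example, FTP's row above works out as `round(100 * 6487 / 65009, 2)`, which is 9.98.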

So about one out of every ten domains on .nyc (a relatively new gTLD, mind you) is still running an FTP service in 2018. Pretty much every domain is serving HTTP, but only about a quarter have HTTPS (port 443) open, i.e. a not-completely-insecure channel.

Since the number of SSH and FTP hosts was nontrivial, I decided to fingerprint them[10][11]. Of the 8021 SSH services discovered during the initial scan, 7982 (99.51%) responded to the fingerprint scan. For FTP, it was 6434 out of 6487 (99.18%).

SSH fingerprinting results (independent columns):

| SSH version | OS version |
|---|---|
| OpenSSH 7.2p2: 1900 | Linux: 4750 |
| OpenSSH 5.3: 1794 | Unknown: 3227 |
| OpenSSH 6.7p1: 1341 | FreeBSD: 5 |
| OpenSSH 6.6.1p1: 706 | |
| OpenSSH 6.9p1: 505 | |
| OpenSSH 7.4: 326 | |
| OpenSSH 7.5: 292 | |
| ProFTPD mod_sftp 0.9.9: 256 | |
| Unknown: 212 | |
| OpenSSH 6.0p1: 156 | |
| OpenSSH 5.1: 140 | |
| OpenSSH 7.4p1: 65 | |
| OpenSSH 6.6.1: 65 | |
| OpenSSH 7.6p1: 42 | |
| OpenSSH 6.2: 33 | |
| OpenSSH 5.9p1: 26 | |
| OpenSSH 4.3: 26 | |
| OpenSSH 7.8: 23 | |
| OpenSSH 7.2: 12 | |
| OpenSSH 5.5p1: 10 | |
| OpenSSH 7.6: 7 | |
| OpenSSH 7.7: 5 | |
| OpenSSH 6.1: 4 | |
| OpenSSH 7.1: 4 | |
| SCS sshd 3.2.9.1: 4 | |
| OpenSSH 7.3: 4 | |
| OpenSSH 7.5p1: 3 | |
| OpenSSH 5.3p1: 3 | |
| OpenSSH 6.4: 3 | |
| OpenSSH 6.6: 3 | |
| OpenSSH 7.9: 2 | |
| Serv-U SSH Server 15.1.1.108: 1 | |
| OpenSSH 5.8p2: 1 | |
| OpenSSH 5.4p1: 1 | |
| OpenSSH 5.2: 1 | |
| OpenSSH 5.8: 1 | |
| OpenSSH 4.7: 1 | |
| OpenSSH 7.3p1: 1 | |
| OpenSSH 4.8: 1 | |
| OpenSSH 5.9: 1 | |
| OpenSSH 5.5: 1 | |

Some interesting outliers there: I had no idea that ProFTPD had SFTP support, or that people actually used SSH Communications Security’s proprietary SSH server. Serv-U appears to be another proprietary SSH offering.

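26 hosts are running OpenSSH 4.3, which had a remote DoS (and potential arbitrary code execution) all the way back in 2006. Only 765 hosts are running OpenSSH >= 7.4, the first version to fix CVE-2016-10708, a trivial DoS. (These counts go by version banners alone. Distributions often backport security fixes without changing the upstream version number, so some older-looking servers may be patched.)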
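The 765 figure is a straightforward version comparison over the table. Here is a Python sketch of it. It treats `OpenSSH X.Y` and `OpenSSH X.YpN` alike by comparing only the major and minor numbers, which is an assumption that works for a >= 7.4 cutoff. Fed the OpenSSH rows from the table, `count_at_least(counts, (7, 4))` gives 765.

```python
import re

# Captures major and minor from banners like "OpenSSH 7.4" or "OpenSSH 6.6.1p1".
OPENSSH_RE = re.compile(r"^OpenSSH (\d+)\.(\d+)")


def count_at_least(counts: dict[str, int], minimum: tuple[int, int]) -> int:
    """Total hosts whose OpenSSH banner is at least `minimum` (major, minor).

    Non-OpenSSH servers (ProFTPD mod_sftp, Serv-U, etc.) are skipped. Banners
    only show the upstream version, so distribution backports are invisible.
    """
    total = 0
    for banner, hosts in counts.items():
        match = OPENSSH_RE.match(banner)
        if match and (int(match[1]), int(match[2])) >= minimum:
            total += hosts
    return total


# All rows from the table at OpenSSH 7.4 and above, plus a few others.
counts = {
    "OpenSSH 7.2p2": 1900, "OpenSSH 5.3": 1794, "OpenSSH 7.4": 326,
    "OpenSSH 7.5": 292, "ProFTPD mod_sftp 0.9.9": 256, "OpenSSH 7.4p1": 65,
    "OpenSSH 7.6p1": 42, "OpenSSH 7.8": 23, "OpenSSH 7.6": 7,
    "OpenSSH 7.7": 5, "OpenSSH 7.5p1": 3, "OpenSSH 7.9": 2,
}
print(count_at_least(counts, (7, 4)))  # 765
```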

And for FTP (independent columns):

| FTP version | OS version |
|---|---|
| Unknown: 5410 | Unknown: 4542 |
| ProFTPD 1.2.10: 735 | Unix: 1761 |
| vsftpd 3.0.2: 69 | Windows: 130 |
| ProFTPD 1.3.5b: 44 | NetBSD: 1 |
| ProFTPD 1.3.5d: 39 | |
| vsftpd 2.0.8 (or later): 24 | |
| vsftpd 3.0.3: 16 | |
| vsftpd 2.2.2: 13 | |
| ProFTPD 1.3.4a: 8 | |
| ProFTPD 1.3.6rc2: 8 | |
| FileZilla ftp 0.9.41: 7 | |
| ProFTPD 1.3.5rc3: 7 | |
| WU-FTPD or Kerberos ftpd 6.00LS: 6 | |
| ProFTPD 1.3.5: 6 | |
| ProFTPD 1.3.5a: 6 | |
| ProFTPD 1.3.3g: 5 | |
| ProFTPD 1.3.4c: 4 | |
| tnftpd 20100324+GSSAPI: 3 | |
| ProFTPD 1.3.4e: 3 | |
| vsftpd 2.0.5: 3 | |
| FileZilla ftp 0.9.39: 2 | |
| ProFTPD 1.3.3e: 2 | |
| ProFTPD 1.3.3a: 2 | |
| Serv-U ftpd 15.1: 1 | |
| vsftpd 2.0.7: 1 | |
| WarFTPd 1.83.00-RC14: 1 | |
| ProFTPD 1.3.5e: 1 | |
| vsftpd 2.3.2: 1 | |
| Gene6 ftpd 3.10.0: 1 | |
| FileZilla ftp 0.9.48: 1 | |
| FileZilla ftp 0.9.43: 1 | |
| ProFTPD 1.3.2e: 1 | |
| FileZilla ftp 0.9.33: 1 | |
| Serv-U ftpd 12.1: 1 | |
| ProFTPD 1.2.8: 1 | |

Note: “Unknown” above means that nmap couldn’t identify both a product name and a version. In fact, 3508 of those “Unknown” FTPds are Pure-FTPd, making it by far the single most popular FTPd on .nyc.

Some interesting outliers: WarFTPd is a very old Windows FTP server, and I can’t even find an original source for Gene6.

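Several hundred hosts (741, going by the table above) are running versions of ProFTPD older than 1.3.3g that may be vulnerable to CVE-2011-4130. Several dozen (up to 42, since the “2.0.8 (or later)” bucket is ambiguous) are running versions of vsftpd older than 2.3.3 that may be vulnerable to CVE-2011-0762.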

To cap things off, I wanted to see how many websites were following security best practices. To do that, I ran twa on 1000 randomly sampled domains[12] that the nmap scan indicated had both HTTP and HTTPS available[13]. For simplicity’s sake, I only ran twa on the base domain, not on www or any other common subdomains.

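```bash
# Audit each sampled domain, appending twa's CSV output to one file.
while read -r domain; do
    TWA_TIMEOUT=2 twa -c "${domain}" | tee -a twa.csv
done < randomhttps.txt

# Each twa run prints its own CSV header; remove them.
sed -i '/status,domain/d' twa.csv
```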
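The footnoted `randomhttps` script handles the sampling step, but it isn't reproduced here. As a hypothetical stand-in, here is a Python sketch that assumes a `dict` mapping each domain to its set of open ports, as you might parse from the nmap output. It keeps domains with both 80 and 443 open and draws a uniform sample without replacement. The optional seed makes a draw repeatable, and printing one domain per line produces input for the loop above.

```python
import random
from typing import Optional


def sample_https_capable(
    open_ports: dict[str, set[int]], k: int = 1000, seed: Optional[int] = None
) -> list[str]:
    """Uniformly sample up to k domains that had both port 80 and port 443 open."""
    # Sorting first makes a seeded draw independent of dict ordering.
    candidates = sorted(d for d, ports in open_ports.items() if {80, 443} <= ports)
    rng = random.Random(seed)
    return rng.sample(candidates, min(k, len(candidates)))


if __name__ == "__main__":
    # Hypothetical input; in practice this would come from the parsed nmap scan.
    ports = {"a.nyc": {80, 443}, "b.nyc": {80}, "c.nyc": {22, 80, 443}}
    print("\n".join(sample_https_capable(ports, seed=0)))
```

Redirecting that output to `randomhttps.txt` gives the loop one domain per line.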

Some interesting statistics:

70 (7%) of the websites scanned sent a Server header containing version information (e.g., nginx/1.14.0)[14]:

| Tag | Count |
|---|---|
| Apache/2 | 15 |
| api-gateway/1.9.3.1 | 2 |
| ATS/7.1.2 | 4 |
| DPS/1.4.21 | 12 |
| Microsoft-IIS/8.5 | 2 |
| nginx/1.1.19 | 1 |
| nginx/1.12.2 | 2 |
| nginx/1.13.6 | 1 |
| nginx/1.14.0 | 24 |
| nginx/1.14.1 | 2 |
| openresty/1.13.6.2 | 5 |

260 (26%) of the websites sent one or more cookies missing the `secure` flag, the `httpOnly` flag, or both[15]. The worst offender sent 9 unsecured cookies[16]!
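twa performs this check itself. Purely to illustrate the rule, here is a Python sketch (not twa's implementation) that takes raw `Set-Cookie` header values and flags any cookie lacking either attribute. Attribute names are matched case-insensitively. The splitting is deliberately naive and assumes one cookie per header value, which is how `Set-Cookie` works.

```python
def insecure_cookies(set_cookie_headers: list[str]) -> list[str]:
    """Return names of cookies missing the Secure flag, the HttpOnly flag, or both.

    Each element is one raw Set-Cookie header value, e.g.
    "session=abc; Path=/; HttpOnly".
    """
    flagged = []
    for header in set_cookie_headers:
        name_value, *attributes = header.split(";")
        name = name_value.split("=", 1)[0].strip()
        # Attribute names are case-insensitive; flags like Secure carry no value.
        attrs = {attr.split("=", 1)[0].strip().lower() for attr in attributes}
        if not {"secure", "httponly"} <= attrs:
            flagged.append(name)
    return flagged


# Hypothetical headers for illustration.
headers = [
    "session=abc123; Path=/; Secure; HttpOnly",
    "tracking=1; Path=/",
    "prefs=dark; Secure",
]
print(insecure_cookies(headers))  # ['tracking', 'prefs']
```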

235 websites redirected HTTP requests to HTTPS using a 301 (or other permanent redirect)[17], while 25 used a 302 (or other temporary redirect)[18]. 549 websites were serving HTTPS (confirming the nmap scan) but didn’t redirect their HTTP traffic at all[19], and another 191 redirected their HTTP traffic to another HTTP endpoint[20]. That makes 740 of the 1000 sampled sites (74%) that never send a plain-HTTP visitor to HTTPS. Thus, without intervention from a browser extension like HTTPS Everywhere, the average user will wind up using plain old HTTP on the average .nyc domain. Not great for 2018.
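Sites that leave visitors on plain HTTP are the last two categories combined (549 + 191 = 740 of the 1000 sampled). Here is a Python sketch of the same tally as the footnoted `grep | wc -l` commands, reusing their message patterns verbatim. It assumes each site contributes at most one redirect line to `twa.csv`. The `PASS,`/`FAIL,` line prefixes in the sample data are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

# Message patterns copied from the footnoted grep commands.
CATEGORIES = {
    "permanent HTTPS redirect (301/308)": re.compile(r"HTTP redirects to HTTPS using a 30[18]"),
    "temporary HTTPS redirect (302/307)": re.compile(r"HTTP redirects to HTTPS using a 30[27]"),
    "no redirect": re.compile(r"HTTP doesn't redirect at all"),
    "redirect to HTTP": re.compile(r"HTTP redirects to HTTP \(not secure\)"),
}


def redirect_breakdown(lines: Iterable[str]) -> Counter:
    """Count twa.csv lines per redirect category; other lines are ignored."""
    tally = Counter()
    for line in lines:
        for label, pattern in CATEGORIES.items():
            if pattern.search(line):
                tally[label] += 1
                break
    return tally


def stays_on_http(tally: Counter) -> int:
    """Sites that never send a plain-HTTP visitor to HTTPS."""
    return tally["no redirect"] + tally["redirect to HTTP"]


sample = [
    "PASS,a.nyc,HTTP redirects to HTTPS using a 301",
    "FAIL,b.nyc,HTTP doesn't redirect at all",
    "FAIL,c.nyc,HTTP redirects to HTTP (not secure)",
]
print(stays_on_http(redirect_breakdown(sample)))  # 2
```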

106 (10.6%) of the websites were listening on one or more non-production ports, possibly indicating either a development version of the site or some kind of backend service[21].
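twa decides for itself what counts as a development or backend port, and its exact list isn't given here. The Python sketch below shows the general kind of probe using a hypothetical port list (`DEV_PORTS` is an assumption, not twa's list). It attempts a plain TCP connection to each port and reports the ones that accept.

```python
import socket
from typing import Iterable

# HYPOTHETICAL list of "development/backend" ports; twa's real list may differ.
DEV_PORTS = (3000, 5000, 8000, 8080, 8888, 9000)


def listening_ports(
    host: str, ports: Iterable[int] = DEV_PORTS, timeout: float = 2.0
) -> list[int]:
    """Return the ports on `host` that accept a TCP connection within `timeout` s."""
    found = []
    for port in ports:
        try:
            # A completed TCP handshake is treated as "listening".
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            pass  # refused, timed out (socket.timeout is an OSError), or unreachable
    return found
```

A connect scan like this only shows that something accepts connections. Telling a dev server apart from a legitimate secondary service takes more work, so this measure is a heuristic.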

There are a bunch of other interesting datapoints in twa.csv, but I’ll leave it up to you to dig through and interpret them.

This blog post took longer than I thought it would: I started writing it on the 3rd of November, but many of the scans I used didn’t complete until the 9th. As a result, I limited the amount of analysis that I did on the data. It would be interesting to see someone more statistically inclined than myself take it on.

I’ve published an archive containing some of the data and tiny scripts I used for this blog post.

I have left out files that contain scan information for any particular domain or domains, including my copy of the nmap scan. I’d like to share the full data with people interested in legitimate research; if that applies to you, please contact me directly and we’ll work out some kind of agreement about usage[22].

Where applicable, the footnotes below refer to a specific file or to an invocation of one of the scripts in that gist. Note that most of the scripts are ad hoc and do things via stdin/stdout, so you should modify them if you need to do anything more complicated.

[1] There were no duplicates in the dataset, so either all domains are still active from initial purchase or the dataset only indicates the latest owner.

[2] `domains.csv` and `domains.json`

[3] For the sake of brevity, I’m going to refer to the IP/CNAME results after this as just “IPs.”

[4] `domains2ips < domains.json > ips.jsonl`

[5] `livesites < ips.jsonl > livesites.jsonl`

[6] `uniqueips < livesites.jsonl`

[7] `ipfreqs < livesites.jsonl > ipfreqs.json`

[8] `topips < ipfreqs.json > topips.json`

[9] `nmap2ports < nmap.txt > ports.jsonl`

[10] `sshversions < ports.jsonl > sshversions.jsonl`

[11] `ftpversions < ports.jsonl > ftpversions.jsonl`

[12] `randomhttps < ports.jsonl > https.txt`

[13] The rationale here was simple: HTTP is fundamentally insecure, so there’s no point in checking for best practices on a website that doesn’t support any secure channel.

[14] `grep 'looks like a version tag' < twa.csv | sed -n 's/.* version tag: \(.*\)".*/\1/p' | sort | uniq -c`

[15] `grep -E 'FAIL,.*cookie' < twa.csv | sed -n 's/FAIL,\(.*\.nyc\).*/\1/p' | sort | uniq | wc -l`

[16] `grep -E 'FAIL,.*cookie' < twa.csv | sed -n 's/FAIL,\(.*\.nyc\).*/\1/p' | sort | uniq -c | sort -rn`

[17] `grep "HTTP redirects to HTTPS using a 30[18]" < twa.csv | wc -l`

[18] `grep "HTTP redirects to HTTPS using a 30[27]" < twa.csv | wc -l`

[19] `grep "HTTP doesn't redirect at all" < twa.csv | wc -l`

[20] `grep "HTTP redirects to HTTP (not secure)" < twa.csv | wc -l`

[21] `grep "is listening on a development/backend port" < twa.csv | sed -n 's/FAIL,\(.*\.nyc\).*/\1/p' | sort | uniq | wc -l`

[22] This is a harm reduction thing, not a legal thing. There’s no security in the herd, but I’d rather not be personally responsible for attackers targeting some of the hosts that I identified.