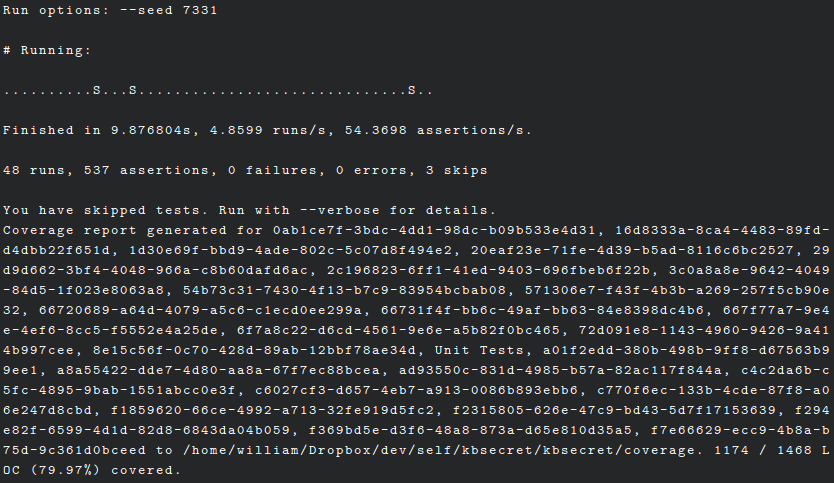

Each UUID above is a separate process.

Apr 1, 2018 Tags: devblog, kbsecret, programming, ruby

This is a short writeup of how I got SimpleCov coverage reports working across multiple Ruby processes, specifically when those processes are created through `Kernel#fork`.

For KBSecret 1.3 (soon to be released!), I’ve significantly refactored the way in which KBSecret executes commands (e.g., `kbsecret list` and `kbsecret new`) as part of a larger effort to simplify the codebase and improve performance.

KBSecret now executes commands “in-process,” meaning that it does not `exec` or otherwise spawn a fresh Ruby interpreter to handle the command. This has two important consequences:

[…] `require` most of the heavy libraries (`keybase-unofficial` and KBSecret itself).

However, KBSecret commands still behave as if they’re in complete control of the process: they call `exit` and `abort` on error conditions, fiddle with I/O, and do all sorts of other things. This makes testing difficult, especially when the tests are of error conditions, since calling `exit` in the command takes the entire test harness down with it.

## fork and pipes

`fork` is the conceptually simple solution to the problem of testing programs that terminate or otherwise modify the process state. Ruby even provides a nice `Kernel#fork` method that takes a block:

```ruby
# BAD! This will take down the test harness if the command decides to exit.
KBSecret::CLI::Command.run! cmd, *args

# GOOD! The command's termination has no (direct) impact on the test harness.
fork do
  KBSecret::CLI::Command.run! cmd, *args
end

# We want to make sure our forked process finishes before we test its state.
Process.wait
```
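To see the isolation without KBSecret in the picture, here is a minimal sketch in which a stand-in block (not a real KBSecret command) calls `exit` inside `fork`. The helper name `run_isolated` is made up for illustration; the parent survives and can inspect the child's exit status via `Process.wait2`.

```ruby
# Runs the given block in a forked child and returns the child's exit status.
# `run_isolated` is a hypothetical helper name, not part of KBSecret.
def run_isolated(&block)
  pid = fork(&block)
  # Process.wait2 returns [pid, Process::Status] for the reaped child.
  _, status = Process.wait2(pid)
  status.exitstatus
end

# The child calls exit(3); only the child terminates.
failing = run_isolated { exit 3 }

# A block that simply returns makes the child exit with status 0.
passing = run_isolated { :ok }

puts "failing child exited with #{failing}, passing child with #{passing}"
```

Returning the exit status also gives the tests something to assert on beyond "the harness is still alive".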

However, `fork` comes with its own challenges: now that the command runs in a separate (child) process, we no longer have direct access to its standard I/O descriptors. Since commands communicate with the user through `stdin`, `stdout`, and `stderr`, we’ll need to introduce a pipe for each:

```ruby
def kbsecret(cmd, *args, input: "")
  pipes = {
    stdin: IO.pipe,
    stdout: IO.pipe,
    stderr: IO.pipe,
  }

  # Send our input into the write-end of our stdin pipe, for the child to read.
  pipes[:stdin][1].puts input

  fork do
    # Child: close those pipe ends we don't need.
    pipes[:stdin][1].close
    pipes[:stdout][0].close
    pipes[:stderr][0].close

    # Reassign the child's global standard I/O handlers to point to our pipes.
    $stdin = pipes[:stdin][0]
    $stdout = pipes[:stdout][1]
    $stderr = pipes[:stderr][1]

    # ...and run the command.
    KBSecret::CLI::Command.run! cmd, *args
  end

  # Parent: close those pipe ends we don't need.
  pipes[:stdin][0].close
  pipes[:stdin][1].close
  pipes[:stdout][1].close
  pipes[:stderr][1].close

  # Wait for our child to finish.
  Process.wait

  # Finally, return the contents of the child's stdout and stderr streams for testing.
  [pipes[:stdout][0].read, pipes[:stderr][0].read]
end
```

This works as expected:

```ruby
>> # a command that runs normally
>> kbsecret "version"
=> ["kbsecret version 1.3.0.pre.3.\n", ""]
>> # a command that terminates via `exit` due to a bad flag
>> kbsecret "list", "-z"
=> ["", "\e[31mFatal\e[0m: Unknown option `-z'.\n"]
```
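One caveat with the `kbsecret` helper: it waits for the child before reading from the pipes, so a child that writes more than the operating system's pipe buffer can hold would block indefinitely. Below is a hedged sketch of one way around that, using a hypothetical `capture_fork` helper and a stand-in block rather than a real KBSecret command. It drains both streams on threads before waiting; the 200,000-byte write is only there to exceed a typical pipe buffer.

```ruby
# Hypothetical helper: runs a block in a child process and captures its output.
def capture_fork(input: "")
  stdin_r, stdin_w = IO.pipe
  stdout_r, stdout_w = IO.pipe
  stderr_r, stderr_w = IO.pipe

  # As in the helper above, queue the input before forking (fine for small inputs).
  stdin_w.write(input)

  pid = fork do
    # Child: keep only the pipe ends it uses.
    [stdin_w, stdout_r, stderr_r].each(&:close)
    $stdin = stdin_r
    $stdout = stdout_w
    $stderr = stderr_w
    yield
  end

  # Parent: close the ends that now belong to the child.
  [stdin_r, stdin_w, stdout_w, stderr_w].each(&:close)

  # Drain both streams concurrently so a chatty child never stalls on a full pipe.
  readers = [stdout_r, stderr_r].map { |io| Thread.new { io.read } }
  out, err = readers.map(&:value)

  Process.wait(pid)
  [out, err]
end

out, err = capture_fork(input: "hello\n") do
  puts "read: #{$stdin.gets}"
  warn "x" * 200_000
  exit 1
end

puts "stdout: #{out.inspect}, stderr bytes: #{err.bytesize}"
```

For short-lived commands with small output the simpler wait-then-read order is fine; this variant only matters once the output gets large.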

So far, we have commands running in their own processes for the purposes of resiliency/testing failure conditions. That’s cool, but what we ultimately want is coverage statistics from those child processes. How do we get there?

Well, because we’re using `fork`, our child processes share the same library context as their parents. That means that we get a copy of anything required or loaded pre-fork, including SimpleCov’s state.
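The "copy" part is worth making concrete. In this small sketch, the `loaded` hash is just a stand-in for pre-fork library state (such as SimpleCov's configuration), not anything SimpleCov actually exposes. The child starts with everything the parent set up before the fork, but its own changes never travel back:

```ruby
# Stand-in for state that was set up before forking.
loaded = { "simplecov" => "configured" }

reader, writer = IO.pipe

pid = fork do
  reader.close
  # The child sees the parent's pre-fork state...
  writer.puts loaded["simplecov"]
  # ...but this mutation only affects the child's copy.
  loaded["simplecov"] = "changed in child"
  writer.puts loaded["simplecov"]
end

writer.close
Process.wait(pid)
child_view = reader.read.lines.map(&:chomp)

puts "child saw:  #{child_view.inspect}"
puts "parent has: #{loaded['simplecov']}"
```

This one-way copy is why each child has to report its own coverage: nothing it records shows up in the parent automatically.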

To take advantage of this, we need to modify our coverage preamble slightly, from something like this:

```ruby
if ENV["COVERAGE"]
  require "simplecov"
  SimpleCov.start
end
```

to this:

```ruby
if ENV["COVERAGE"]
  require "simplecov"

  # Only necessary if your tests *might* take longer than the default merge
  # timeout, which is 10 minutes (600s).
  SimpleCov.merge_timeout(1200)

  # Store our original (pre-fork) pid, so that we only call `format!`
  # in our exit handler if we're in the original parent.
  pid = Process.pid
  SimpleCov.at_exit do
    SimpleCov.result.format! if Process.pid == pid
  end

  # Start SimpleCov as usual.
  SimpleCov.start
end
```
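The pid guard matters because a forked child inherits its parent's exit handlers and runs them when it exits. The following sketch shows the same guard with plain `Kernel#at_exit` instead of SimpleCov. It is wrapped in an outer `fork` purely so that the handlers fire while the example is still running; the messages and the pipe are illustrative only.

```ruby
reader, writer = IO.pipe

# The outer fork plays the role of the test process.
outer = fork do
  reader.close
  original_pid = Process.pid

  # Unguarded: runs in this process and again in every forked child.
  at_exit { writer.puts "unguarded" }

  # Guarded: runs only in the process that registered it.
  at_exit { writer.puts "guarded" if Process.pid == original_pid }

  # The (empty) child inherits both handlers and runs them on exit.
  Process.wait(fork {})
end

writer.close
Process.wait(outer)
lines = reader.read.lines.map(&:chomp)

puts "guarded ran #{lines.count('guarded')} time(s), " \
     "unguarded ran #{lines.count('unguarded')} time(s)"
```

Without the guard, every child would try to format a report of its own on the way out.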

We also need to add a tiny bit of code to our fork block:

```ruby
# SecureRandom is in the standard library, but has to be required explicitly.
require "securerandom"

fork do
  if ENV["COVERAGE"]
    # Give our new forked process a unique command name, to prevent problems
    # when merging coverage results.
    SimpleCov.command_name SecureRandom.uuid
    SimpleCov.start
  end

  # Same as the fork-block code above...
end
```
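Why the unique name matters: as I understand it, SimpleCov files each process's result under its command name, so two processes that report under the same name end up competing for one slot. The toy model below is not SimpleCov's code, just a hash keyed by name (with made-up file names and hit counts) that shows the effect the UUID avoids.

```ruby
require "securerandom"

# Toy stand-in for a result set keyed by command name (not SimpleCov's real code).
def store(resultset, command_name, coverage)
  resultset.merge(command_name => coverage)
end

# Made-up per-process line hit counts, for illustration only.
list_run = { "command/list.rb" => [1, 1, 0] }
new_run  = { "command/new.rb"  => [1, 0, 1] }

# Same name: the second process replaces the first.
shared = store(store({}, "Unit Tests", list_run), "Unit Tests", new_run)

# Unique names: both results survive and can be merged later.
unique = store(store({}, SecureRandom.uuid, list_run), SecureRandom.uuid, new_run)

puts "shared name keeps #{shared.size} result(s), unique names keep #{unique.size}"
```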

And ta-da, multi-process coverage reports:

Each UUID above is a separate process.

Each UUID above is a separate process.

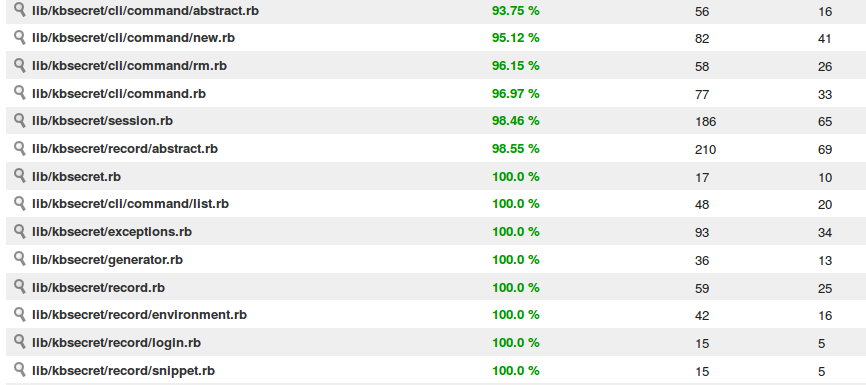

command/new.rb, command/list.rb, and command/rm.rb are all tested under separate processes.

This technique works great locally, but not so great on remote services like Codecov. To get properly merged multi-process coverage results on Codecov, you’ll need to do some additional post-processing.

Here’s an example rake task:

```ruby
desc "Upload coverage to codecov"
task :codecov do
  require "simplecov"
  require "codecov"

  formatter = SimpleCov::Formatter::Codecov.new
  formatter.format(SimpleCov::ResultMerger.merged_result)
end
```

This handles uploading to Codecov, so there’s no need to `require "codecov"` in your `helper.rb` or equivalent file.
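For intuition about what a merged result contains: conceptually, merging adds up per-line hit counts for each file across all of the recorded runs. The sketch below is a toy approximation with hypothetical helper names (`merge_lines`, `merge_results`) and made-up data, not SimpleCov's actual merging code. `nil` marks a line that isn't executable.

```ruby
# Toy merge of two per-file line-hit arrays of equal length.
def merge_lines(first, second)
  first.zip(second).map do |a, b|
    # A line that is non-executable in both runs stays nil; otherwise sum the hits.
    a.nil? && b.nil? ? nil : a.to_i + b.to_i
  end
end

# Toy merge of any number of { filename => line hits } results.
def merge_results(*results)
  results.reduce({}) do |merged, result|
    merged.merge(result) { |_file, a, b| merge_lines(a, b) }
  end
end

# Made-up coverage: the parent never runs list.rb's body, but a child does.
parent = { "cli.rb" => [nil, 1, 1, 0], "command/list.rb" => [nil, 0, 0] }
child  = { "command/list.rb" => [nil, 1, 2] }

merged = merge_results(parent, child)
puts merged.inspect
```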

Thus, the complete workflow:

```bash
# Run unit tests with code coverage enabled.
$ COVERAGE=1 bundle exec rake test

# Stitch the previous results together and send the merged result to Codecov.
$ bundle exec rake codecov
```

Check out KBSecret’s repository for a working example.

Thanks for reading!